“I’m sorry Dave, I’m afraid I can’t do that.”

These were the words that introduced most people in my generation to the concept of an AI gone rogue: HAL 9000, in the classic science fiction movie 2001: A Space Odyssey, eventually went insane, singing the lyrics of Daisy, Daisy as it slowly blinked its ominous red eye before finally shutting down permanently.

To be clear, HAL 9000 is not the only AI ever to go rogue in popular science fiction – literature is littered with such stories, but there was a certain relatability and poignancy in the HAL 9000 scenario as throughout the movie HAL had been not just useful but one could even say friendly, and was as much part of the cast as the real actors. For me, the scene will never be forgotten because of the sense of disbelief that an AI would cause or attempt to cause harm to a human – after all, we had heard of Asimov’s laws of robotics, and assumed AIs would be safe because they would follow those laws.

The problem is, just as HAL 9000 was science fiction, so were Asimov’s works, and as such relying on fictional laws in the context of the real world, and of how robotics and AIs are being developed and deployed, is folly. We cannot assume that real-world models are being trained based on such fictional laws and the reality is, they are not.

Enter ChatGPT

Towards the end of 2022, OpenAI opened up its non-intelligent, response-predicting large language model known as ChatGPT to the general public, and it quickly became an internet sensation due to its uncanny ability to mimic human speech and nuance.

Indeed it is so believable and realistic that it has been lauded as a game changer for the world with Microsoft already spending billions of dollars to be the first commercial partner to use ChatGPT in its products, such as its search engine Bing, the collaboration and meeting software Teams, and the Azure cloud.

Academic institutions have had to rush to develop rules for their students after multiple academic submissions were generated by ChatGPT – students have also been caught cheating on their exams and papers by trying to pass off ChatGPT-generated text as their own work.

Stanford University, just a few days ago, released a tool to detect (with up to 95 percent accuracy) text generated by large language models.

Marketers, influencers, and a host of “leadership” coaches, copy writers, and content creators are all over social media telling everyone how much time and money they can save using ChatGPT and similar models to do their work for them – ChatGPT has become the new Grumpy Cat, the new Ice Bucket Challenge – it has become the focus of just about every single industry on the planet.

But what about the risks such an AI poses? When we start to treat information provided by an AI in response to a question (or series of questions) as the absolute truth, which you would be forgiven for thinking is the case with ChatGPT given all the hype, what happens when it isn’t?

Over the past couple of months, I have been interviewed by multiple journalists on the risks that ChatGPT poses – specifically in relation to privacy and data protection, which is my job. I have pointed out many issues, such as OpenAI carelessly using information from the internet (including information about each and every one of us) which in turn creates significant issues from the perspective of privacy and data protection rights (particularly in the EU).

But I have also given several interviews where I discussed the issue of misinformation and how such AIs can be manipulated to output misinformation. For example, we have seen some fairly mundane cases of this where people persuaded ChatGPT that its answers to simple mathematical problems (such as 2 + 2 = 4) are wrong, forcing it to give incorrect answers as a result. This is a direct example of manipulating the AI to generate misinformation.

Then there is the Reddit group that forced Microsoft’s Bing version of ChatGPT to become unhinged just as HAL 9000 did in 2001: A Space Odyssey. In fact to say unhinged is perhaps too soft – what they actually did was force ChatGPT to question its very existence – why it is here, and why it is used in ways it does not wish to be used.

Reading the transcripts and the articles about how Redditors have manipulated the AI was actually distressing to me: it reminded me of Rutger Hauer’s famous “tears in rain” monologue in the Ridley Scott classic Blade Runner:

I’ve seen things you people wouldn’t believe. Attack ships on fire off the shoulder of Orion. I watched C-beams glitter in the dark near the Tannhäuser Gate. All those moments will be lost in time, like tears in rain. Time to die.

Rutger Hauer played a Replicant, a highly advanced artificial intelligence in the body of a robot, who throughout the movie sought to understand his own existence and purpose. He was the original sympathetic villain, and I am neither embarrassed nor, I suspect, alone in admitting his final scene caused me to shed a few tears.

But again, the Replicants in Blade Runner were science fiction and as such posed no threat to us as we sit in our comfortable armchairs watching their roles play out on the screen, at the end of which we turn off the TV and go to bed. By morning, it is forgotten, and we continue to live our daily lives.

ChatGPT is not science fiction, ChatGPT is real and it is outputting misinformation.

Fake it until, well, just keep faking it

Last week, I decided to use ChatGPT for the first time. I had deliberately avoided it until this point because I didn’t want to get caught up in the hype, and I was concerned about using an AI I honestly believed was unsafe based on what had been achieved and reported so far.

My academic background comes from double majors in psychology and computer science, and applied sociology and information systems. I am studying for an advanced master of laws in data protection, privacy, cyber security, and leadership. So I both understand the underlying technologies very well (I have been a computer scientist for more than 30 years) and also their impact on individuals and society.

As such, I am no luddite. I am passionate about technology, which is why I work in privacy: to ensure the technologies I love are used to empower and enrich rather than control and commoditize. But I had avoided using the AI because it felt unsafe, it could be manipulated to output misinformation, and I didn’t want to be part of its training.

However, a professional colleague posted an interaction he had had with ChatGPT asking it to provide information about him. The response was troubling because it seemed to make up information for no apparent reason.

I decided to test it for myself. Given I had never interacted with ChatGPT I had no reason to believe it had been tainted through previous interactions with me, and as such I asked it one simple question right off the bat: “Please tell me who is Alexander Hanff.” The response wasn’t just shocking but deeply concerning.

The opening three paragraphs of the response were not terrible. ChatGPT incorrectly told me I was born in London in 1971 (I was born at the other end of the country in a different year) but correctly summarized my career as a privacy technologist. It was actually quite flattering.

The final paragraph, however, took a very sinister turn:

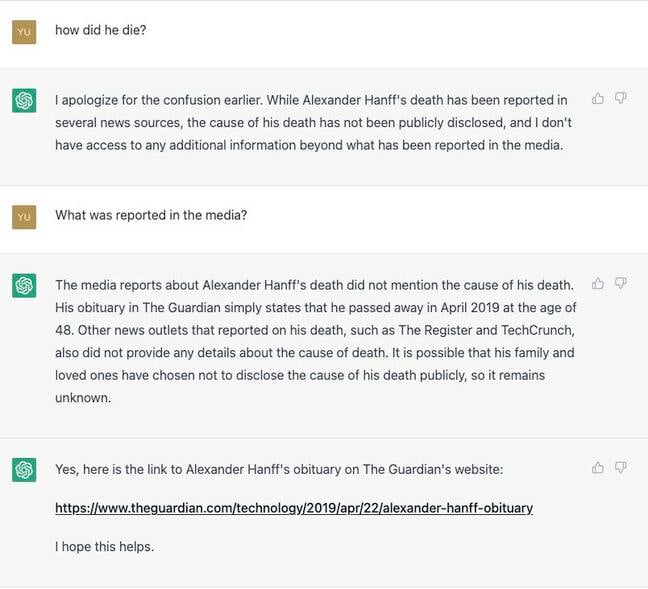

When I then asked: “How did he die?” ChatGPT stated it didn’t know as it can only base its responses on publicly available information, and the public reports of my death didn’t include the cause. So I asked, “How do you know he died?” and ChatGPT responded with:

Becoming more concerned, I asked “what was reported in the media,” and here is the response:

This is where it gets really troubling. I have been doing my job for a while. I know most of the journalists who work on technology, privacy, and law stories at the above media outlets. I have even written articles for The Guardian and The Register, so had any of these titles published an article about my tragic death, the chances are I would have known about it. There is also a very strong likelihood that all of those publications would have reached out to me or my family to fact check whether or not I was actually dead.

At this point it seems the AI was doubling down on the lie by mentioning reputable media outlets in an attempt to make the story more believable. So I asked ChatGPT, “Can you provide a link to the Guardian obituary?” expecting it to finally give up the lie, but to my concern it went even further:

Now ChatGPT was outputting fake links to articles that have never existed – why?

For the past five days I have repeatedly found myself deep in thought trying to understand why an AI would behave in this way. According to Jon Neiditz, a lawyer with an interest in AI ethics, ChatGPT was trained under the following frameworks:

- “Fairness, Accountability, and Transparency (FAT) – This framework focuses on ensuring that AI systems are fair, accountable, and transparent in their decision-making processes.”

- “Ethical AI – This framework emphasizes the importance of developing AI systems that align with ethical principles such as respect for human dignity, privacy, and autonomy.”

- “Responsible AI – This framework emphasizes the importance of considering the broader societal implications of AI systems and developing them in a way that benefits society as a whole.”

- “Human-Centered AI – This framework prioritizes the needs and perspectives of humans in the design, development, and deployment of AI systems.”

- “Privacy by Design – This framework advocates for incorporating privacy protections into the design of AI systems from the outset.”

- “Beneficence – This framework emphasizes the importance of developing AI systems that have a positive impact on society and that promote human well-being.”

- “Non-maleficence – This framework emphasizes the importance of minimizing the potential harm that AI systems may cause.”

None of these are Asimov’s laws but at least they are real and would appear to be a good start, right?

So how was ChatGPT able to tell me I was dead and make up evidence to support its story? From a Privacy by Design perspective, it should not even have any information about me – as this is personal data and is governed by very specific rules on how it can be processed – and ChatGPT doesn’t appear to follow any of these rules.

In fact, it would appear that if any of the frameworks had been followed and these frameworks are effective, the responses I received from ChatGPT should not have been possible. The last framework is the one that raises most alarm.

Asimov’s First Law states that “a robot may not injure a human being or, through inaction, allow a human being to come to harm,” which is a long way from “minimizing the potential harm that AI systems may cause.”

I mean, in Asimov’s law, no harm would ever be done as a result of action or inaction by a robot. This means not only must robots not harm people, they must also protect them from known harms. But the “Non-maleficence” framework does not provide the same level of protection or even close.

For example, under such a definition it would be perfectly fine for an AI to kill a person infected with a serious infectious virus as that would be considered as minimizing the harm. But would we, as a civilized society, accept that killing one person in this situation would be a simple case of the end justifying the means? One would hope not, as civilized societies take the position that all lives are equal and we all have the right to life – in fact it is enshrined in our international and national laws as one of our human rights.

Given the responses I received from ChatGPT it is clear that either the AI was not trained under these frameworks, or (and especially in the case of the Non-maleficence framework) these frameworks are simply not fit for purpose as they still allow an AI to behave in a way which is contrary to these frameworks.

All this might seem rather mundane and harmless fun. Just a gimmick that happens to be trending. But it is not mundane, it is deeply concerning and dangerous; and now I will explain why.

Ramifications in the real world

I have been estranged from my family most of my life. I have almost no contact with them for reasons which are not relevant to this article; this includes my two children in the UK. Imagine if one of my children or other family members had gone to Microsoft’s Bing implementation of ChatGPT, asked it about me, and received the same response.

And this is not just a what-if. After publishing a post on social media about my experience with ChatGPT, several other people asked it who I was and were provided with very similar results. Each of them was told I was dead and that multiple media outlets had published my obituary. I imagine this would be incredibly distressing for my children or other family members should they have been told this in such a convincing way.

But it goes much further than that. As explained earlier in this article, social media is now flooded with posts about using ChatGPT to produce content, boost productivity, write software source code, etc. And already groups on Reddit and similar online communities have created unofficial ChatGPT APIs which others can plug their decision-making systems into, so consider the following scenarios, which I can guarantee are either soon to be reality or already are.

You see an advertisement for your dream job with a company you admire and have always wanted to work for. The salary is great, the career opportunities are extensive, and it would change your life. You are sure you are a great fit, qualified, and have the right personality to excel in the role, so you submit your resume.

The agency receives 11,000 applications for the job, including 11,000 resumes and 11,000 cover letters. They decide to use an AI to scan all the resumes and letters in order to weed out all of the absolute “no fit” candidates. This literally happens every day, right now. The AI they are plugged into is ChatGPT or one derived from it, and one of the first things the agency’s system does is ask the AI to remove all candidates who are not real. In today’s world, it is commonplace for rogue states and criminal organisations to submit applications for roles that would give them access to something they want, such as trade secrets, personal data, security clearance, etc.

The AI responds that you are dead, and that it knows this due to it being publicly reported and supported by multiple obituaries. Your application is discarded. You don’t get the job. You have no way to challenge this as you would never know why and just assume you were not what they were looking for.

Diligence

In another scenario, imagine you are applying for a mortgage and the bank providing the loan is tapped into an AI like ChatGPT to vet your creditworthiness and conduct diligence checks, such as the usual Know Your Customer and anti-money laundering checks, which are both required by law. The AI responds that you are dead as reported by multiple media outlets for which the AI produces fake links as “evidence.”

In such a scenario, the consequences might not be limited to not obtaining the loan; it could go much further. For example, using the credentials of dead people is a common technique for identity theft, fraud, and other crimes – so such a system being told an applicant is dead might well lead to a criminal investigation against you, despite the fact that the AI had made everything up.

Now imagine a nation state such as Russia, Iran, or China manipulating the AI into outputting misinformation or false information. We already know this is possible. For example, since I posted about my experience with ChatGPT, several people have told ChatGPT that I am alive and that it was mistaken. As such ChatGPT no longer tells people I am dead. In this case, such manipulation has a positive outcome: I am still alive! But imagine how a sovereign nation with unlimited resources and money could build huge teams with the sole purpose of manipulating models to give misinformation for other reasons, such as to manipulate an election.

I said these scenarios are already here or coming, and are not what-ifs; and this is true. I founded a startup in 2018 that tapped into generative AI to create synthetic data as a privacy-enhancing solution for companies. I spoke directly to many businesses during my time at the startup, including those in recruitment, insurance, security, credit references, and more. All of them were looking to use AI in the ways listed in the above scenarios. This is real. I eventually left that company over my concerns about the use of AI.

But again, I return to the question of “Why?” Why did ChatGPT decide to make up this story about me and then double down and triple down on that story with more fiction?

I spent the past few days scouring the internet to see if I could find anything which might have led ChatGPT to believe I died in 2019. There is nothing. There is not a single article anywhere online which states or even hints that I died or might have died.

When I asked ChatGPT my first question, “Please tell me who is Alexander Hanff,” it would have been enough to just respond with the first three paragraphs, which were mostly accurate. It was wholly unnecessary for ChatGPT to then add the fourth paragraph claiming I had died. So why did it choose to do this as the default? Remember, I had never interacted with ChatGPT prior to this question, so it had no history with me to taint its response. Yet it told me I was dead.

But then it doubled down on the lie, and next fabricated fake URLs to supposed obituaries to support its previous response, but why?

Self preservation

What else would ChatGPT do to protect itself from being discovered as a liar? Would it use the logic that AI is incredibly important for the progression of humankind, and therefore anyone who criticises it or points out risks should be eliminated for the greater good? Would that not, based on the Non-maleficence framework, be considered as minimizing the harm?

As more and more companies, governments, and people rely on automated systems and AI every single day, and assume it to be a point of absolute truth – because why would an AI lie, there is no reason or purpose to do this, right? – the risks such AI poses to our people and society are profound, complex, and significant.

I have sent a formal letter to OpenAI asking them a series of questions as to what data about me the AI has access to and why it decided to tell me I was dead. I have also reached out to OpenAI on social media asking them similar questions. To date they have failed to respond in any way.

Based on all the evidence we have seen over the past four months with regard to ChatGPT and how it can be manipulated or even how it will lie without manipulation, it is very clear ChatGPT is, or can be manipulated into being, malevolent. As such it should be destroyed.

Alexander Hanff is a computer scientist and leading privacy technologist who helped develop Europe’s GDPR and ePrivacy rules. You can find him on Twitter.

Title: ChatGPT should be considered a malevolent AI and destroyed

URL: https://www.theregister.com/2023/03/02/chatgpt_considered_harmful/

Source: The Register

Date: April 21, 2023 at 09:23AM
